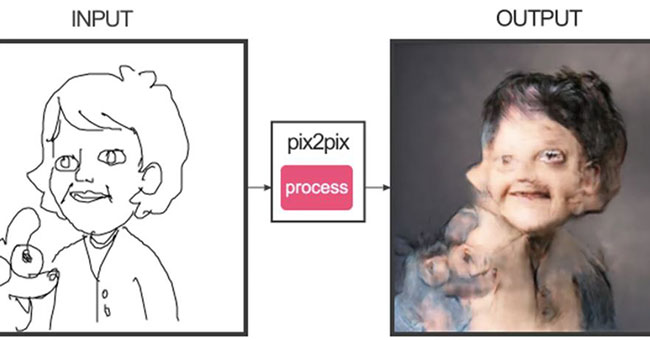

To customize models and datasets, start from our templates. To help users understand and adapt the codebase, we provide a brief overview of the repository's code structure. Below are a few example outputs from the pix2pix cGAN after training for 200 epochs on the facades dataset. The pre-trained models are available in the Datasets section on GitHub. The models released alongside the original pix2pix implementation are also available. The models used for the JavaScript implementation are available at pix2pix-TensorFlow-models.

Pix2pix

Christopher Hesse wrote an excellent explanation, which also documents his TensorFlow port of our code. Karoly Zsolnai-Feher made the above as part of his very impressive "Two Minute Papers" series. For Docker users, we provide a pre-built Docker image and a Dockerfile.

Pix2pix Features

In Colab, you can select other datasets from the drop-down menu. Note that some of the datasets are considerably larger. Bertrand Gondolin trained our method to translate sketches into Pokemon, resulting in an interactive drawing tool. Nono Martinez Alonso used pix2pix in his master's thesis on human-AI collaboration for design. Several pix2pix models assist the human designer by "suggesting" refinements, enhancements, and completions of the designer's sketch. Scott Eaton uses a customized version of pix2pix as a tool in his artwork, for example by training a net to translate sketches and strokes into 3D renderings. The sculpture above is a real bronze inspired by one of Scott's translated designs.

We provide Python and Matlab scripts for extracting coarse edges from photos. Run scripts/edges/batch_hed.py to compute HED edges. The automatically detected edges are not very good and in many cases miss the cat's eyes, which makes the image translation model harder to train.

I recently made a TensorFlow port of pix2pix by Isola et al., which is covered in the article Image to Image Translation using Tensorflow. I took some pre-trained models and built an interactive web application for trying them out. For pix2pix and your own models, you need to explicitly specify --netG, --norm, and --no_dropout to match the generator architecture of the trained model.

Clipping input data to the valid range for imshow with RGB data ([0..1] for floats or [0..255] for integers). Mario Klingemann used our code to translate the appearance of French singer Francoise Hardy onto Kellyanne Conway's infamous "alternative facts" interview. Interesting articles about it can be found here and here.
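The imshow message above appears when pixel values fall outside the displayable range. As a minimal sketch, assuming the model emits float values in roughly [-1, 1] (an assumption, since the text does not state the output range), the values could be rescaled and clamped before display. The helper name to_display_range is hypothetical and not part of any pix2pix codebase.

```python
def to_display_range(pixels, low=-1.0, high=1.0):
    """Map values from [low, high] to [0, 1] and clamp anything outside.

    `low` and `high` describe the assumed model output range; they are
    illustrative defaults, not values taken from the pix2pix code.
    """
    scale = high - low
    return [min(1.0, max(0.0, (p - low) / scale)) for p in pixels]


# One row of float pixel values, two of which overshoot the assumed range.
row = [-1.2, -1.0, 0.0, 0.5, 1.0, 1.3]
display_row = to_display_range(row)
print(display_row)
```

With values already inside [0, 1], a float image can be passed to imshow without triggering the clipping message.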

The model is not sure what to do with a large empty area, but if you place enough windows in it, the results are usually reasonable. Download a pre-trained model with ./scripts/download_pix2pix_model.sh.

How to Get pix2pix for Free

The generated image can become structurally similar to the target image. Then, a pix2pix model translates the pantomime into renderings of the imagined objects. You can view the results of a previous run of this notebook on TensorBoard.dev. Calculate the gradients of the losses with respect to both the generator and the discriminator variables, and apply them to the optimizer.

Because this tutorial can run on more than one dataset, and the datasets vary greatly in size, the training loop is set up to work in steps instead of epochs. Some of the images look especially creepy because it is much easier to notice when an animal looks wrong, particularly around the eyes.
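The step-based loop described above can be sketched in plain Python. This is an illustration under stated assumptions, not the tutorial's actual code: run_training, repeat_forever, and the train_step callback are hypothetical names, and the dataset is assumed to be non-empty and re-iterable (for example, a list of batches).

```python
from itertools import islice


def repeat_forever(dataset):
    """Yield batches endlessly by re-iterating the dataset (assumed non-empty)."""
    while True:
        yield from dataset


def run_training(dataset, total_steps, train_step, log_every=1000):
    """Call `train_step` exactly `total_steps` times, regardless of dataset size."""
    batches = islice(repeat_forever(dataset), total_steps)
    for step, batch in enumerate(batches):
        train_step(batch, step)
        if (step + 1) % log_every == 0:
            print(f"completed {step + 1} steps")


# Usage: a three-batch toy dataset trained for seven steps wraps around twice.
seen = []
run_training(
    dataset=["a", "b", "c"],
    total_steps=7,
    train_step=lambda batch, step: seen.append((step, batch)),
    log_every=5,
)
```

Counting steps rather than epochs keeps the total amount of training comparable when switching between a small dataset and a much larger one.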

If you would like to apply a pre-trained model to a collection of input images, use the --model test option. See ./scripts/test_single.sh for how to apply a model to Facade label maps (stored in the directory facades/test). To keep it short, you will use a preprocessed copy of this dataset created by the pix2pix authors. Download the pre-trained models with this script. You need to rename the model (e.g., facades_label2image to /checkpoints/facades/latest_net_G.t7) after the download has finished. You can find helpful tips for training and testing in the frequently asked questions.

This cat was created by Vitaly Vidmirov (@vvid). To train and test pix2pix-based colorization models, add --model colorization and --dataset_mode colorization. A disc_loss value below 0.69 means the discriminator is doing better than random on the combined set of real and generated images. You need to separate the real facade images from the architecture label images, all of which are 256×256. The model was trained on a database of building facades paired with labeled building facades.
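The 0.69 reference point for disc_loss is ln(2), the binary cross-entropy of a classifier that outputs 0.5 for every image. The sketch below checks this numerically, assuming the reported loss is a mean per-example binary cross-entropy (an assumption about how the value is aggregated); the function name binary_cross_entropy is illustrative.

```python
import math


def binary_cross_entropy(predicted_prob, label):
    """Cross-entropy for one example; `label` is 1 for real and 0 for generated."""
    eps = 1e-12  # keep log() away from zero
    p = min(max(predicted_prob, eps), 1.0 - eps)
    return -(label * math.log(p) + (1 - label) * math.log(1.0 - p))


# A discriminator that always answers "50% real" is guessing at random.
chance_loss = binary_cross_entropy(0.5, 1)

# A discriminator that is fairly sure, and right, scores below that level.
confident_loss = binary_cross_entropy(0.9, 1)

print(f"chance: {chance_loss:.4f}, confident: {confident_loss:.4f}")
```

Under this assumption, a loss below ln(2) means the discriminator separates real from generated images better than chance.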

Pix2pix System Requirements

- Operating System: Windows XP/Vista/7/8/8.1/10

- RAM: 512 MB

- Hard Disk: 50 MB

- Processor: Intel Dual Core or higher processor